Introduction

You may have read about how AI (Artificial Intelligence) is rapidly transforming the education sector, bringing exciting opportunities. It’s true that AI offers personalised learning experiences, enhanced accessibility, and the potential for streamlining processes to save valuable time. Educators and students can greatly benefit from these advancements. However, it’s crucial to embrace AI responsibly, ensuring the safety and well-being of the school community.

By thoughtfully integrating this evolving technology, we can unlock a future where learning is more engaging, inclusive, and effective, empowering students to reach their full potential while supporting educators in their vital roles.

Our Safe AI course has been developed in partnership with AI experts Educate Ventures Research (EVR).

If you are the Designated Safeguarding Lead (DSL) or another leader in your setting with responsibility for safeguarding, pastoral care and/or technology implementation and use, our comprehensive Safeguarding AI for Leaders (SAIL) course will ensure that you have the confidence and understanding to lead on safe and ethical AI use in your setting.

Our Safe AI course

- Who is it for? Designated Safeguarding Leads (DSLs), pastoral leaders, and other school leaders.

- What will you learn? Develop and lead safe and ethical AI policies, audit safeguarding procedures, identify priority projects, develop a comprehensive action plan and ensure compliance.

- Benefits: Gain confidence, access resources and templates, ensure compliance, learn from real-life stories, network with peers, receive ongoing support for a year.

- Duration: 4-5 hours of self-paced online study with 12 months of access to the library of resources.

Course details

Safeguarding AI for Leaders (SAIL)

Course content

The course will focus on five key themes:

- Safeguarding – Adapting our approach for AI

- Safeguarding 2.0 – Exploring new threats

- Ethics

- Security and regulation

- Practical implementation

Safeguarding – Adapting our approach for AI

- Examine existing safeguarding practices and explore where we need to adapt our approach in order to respond to threats and concerns enhanced by the use of AI.

- Explore issues such as fake news, deepfake images and audio spoofing, and consider how to respond to these digital threats.

- Build on existing knowledge of inappropriate tools and activity online and highlight how AI has impacted the use of inappropriate tools.

- Safeguard against misuse of AI tools in the hands of young people and broaden understanding of how harmful online practices can be intensified by AI.

Safeguarding 2.0 – Exploring new threats

- Get to grips with new safeguarding concerns introduced by the use of AI systems such as a lack of agency for students.

- Plan how to mitigate the harmful effects of bias within AI systems and the data they are trained on.

- Explore the consequences of a lack of transparency within machine learning systems.

- Understand how over-reliance on technology and increasingly sophisticated AI tools could encourage young people to misplace their trust in Artificial Intelligence.

Ethics

- Maintain a focus on the digital rights of young people and ensure they are protected from over-profiling in a data-heavy environment.

- Underpin decision-making with an awareness of how learners and teachers could become deskilled through the adoption of AI tools.

- Consider how to balance the side-effects of over-reliance on technology with leveraging AI for good.

- Ensure that AI implementation serves to improve equity rather than expand existing disadvantage gaps.

Security and regulation

- Safeguard student data and ensure personal data is protected by all stakeholders when engaging with AI systems.

- Ensure AI use is compliant with statutory requirements, for example those set out in Keeping Children Safe in Education (KCSIE) and the Prevent duty, as well as UK government AI regulations.

- Develop an understanding of the importance of safeguarding intellectual property.

- Explore existing AI legislation and the implications for educational settings.

Practical implementation

- Draw the four main themes together and apply key concepts to your individual context.

- Re-examine existing policies in light of AI safeguarding issues discussed.

- Formulate a strategy for the safe and ethical implementation of AI across the school community.

- Build relationships with colleagues to support the safe use of AI in schools.

How will you benefit?

This course will benefit you and your setting in a wide variety of ways:

- Gain confidence by developing a well-informed approach to AI use, identifying potential risks and planning how to mitigate them through training and active discussion.

- Have access to a range of structured templates and frameworks which will enable you to audit your setting, identify priorities and create action plans for change.

- Ask advice from an AI expert and gain feedback on your action plan during your cohort’s ‘Live Lab’ session.

- Understand the latest legislation and ensure your setting is compliant, receiving guidance from AI experts like Professor Rose Luckin and drawing on evidence-informed safeguarding practice.

- Learn from the experience of others by watching and listening to real-life stories from other DSLs and educators in schools.

- Network, collaborate and share best practice with peers online for the full 12 months, as well as during your ‘Live Lab’ session.

- Receive ongoing support for a full year, including a library of resources that will be updated regularly and active conversations in the community areas, ensuring your knowledge keeps up with developments in this sector.

- Scale your ‘safe AI’ practices by rolling out training and support for staff across your setting.

Why choose this course?

This course gives you the knowledge and skills to develop and lead, with confidence, safe and ethical AI policies and practices in your setting that meet all relevant rules and regulations.

You will:

- Audit and improve your safeguarding policies and procedures around AI usage.

- Identify priority projects in your setting to ensure a greater whole-school understanding of the risks of AI and how to safeguard against these.

- Engage stakeholder groups, including staff and students, to discuss and implement safe and ethical AI use.

You’ll have the opportunity to meet online with one of our experienced tutors to gain feedback and advice on your assignments and action plan, and you will have access to the online course and resources for 12 months. This will enable you to refresh your knowledge and use the discussion boards and course resources as you become an effective and confident AI safeguarding leader.

Who is this course designed for?

This course is designed for Designated Safeguarding Leads (DSLs) in schools and other educational settings. Pastoral leaders, members of the Senior Leadership Team and others with responsibility for safeguarding and/or technology use in the setting, such as IT managers, may also find this course useful.

How is the course delivered?

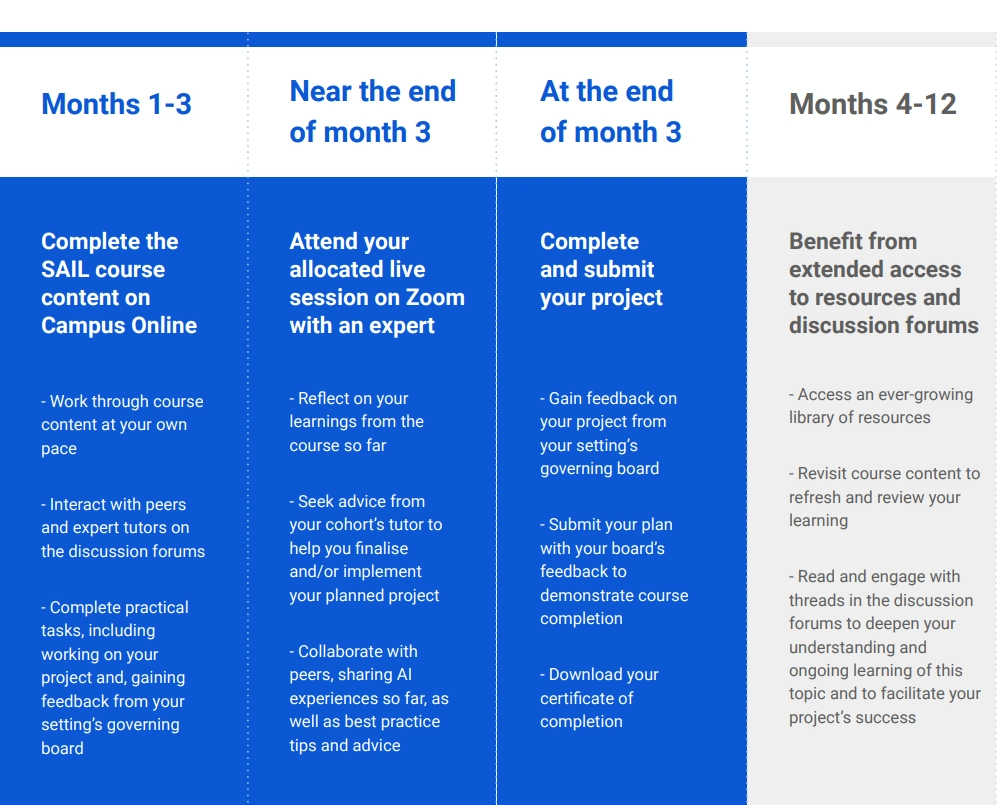

- The course will be available to study at your own pace on our online learning platform, Campus Online.

- You will have access to a wide range of engaging and interactive content and resources including articles, videos, case studies and discussion forums.

- You will benefit from completing a number of practical tasks to embed your learning and implement improved policies and procedures in your setting.

- You will have the opportunity to attend a ‘Live Lab’ session online in which you will meet with an expert tutor and peers to discuss your project work and AI experiences in school so far.

- The team of expert AI tutors is available to further support your learning journey through the collaborative and informative online discussion threads. Best practice sharing with this team and your peers is encouraged to get the most out of the course.

How will you be assessed?

During the course, delegates will complete the following:

- A self-assessment at the start of the course to determine the setting’s current practice and policy regarding safe AI use.

- Short practical tasks throughout.

- An action plan, using a template provided, which should be shared with stakeholders in your setting for feedback. This can then be used to update and improve the setting’s policies and/or practice regarding the safe use of AI tools and systems.

Near the end of your first three months on the course, you will attend a ‘Live Lab’ session to discuss your learning and the practical work you have completed so far.

Upon submission of your action plan at the three-month mark, you will be able to download a completion certificate and continue to benefit from access to the course and all of its resources.

Are you eligible?

To complete this course, you will need to be based in an educational setting with some responsibility for leading (or co-leading) safeguarding and/or technology use.

When does the course start?

The next cohort dates are as follows:

| Cohort start date | Live Lab date* | Project submission deadline |
| --- | --- | --- |
| 3 Nov 2025 | 19 Jan 2026 | 3 Feb 2026 |
| 5 Jan 2026 | 23 Mar 2026 | 6 Apr 2026 |
| 23 Feb 2026 | 11 May 2026 | 25 May 2026 |

* The exact date for the live session will be confirmed at the start of your cohort.

Book now via our online booking form to secure your place.

How much does the course cost?

£395 + VAT

You may wish to consider a package for your school or MAT to train multiple staff members. Please contact us for a bespoke quote or see our package options.

How long does it take to complete the course?

You will complete 4-5 hours of learning and the practical activities within the first 3 months of access, with a live session near the end of the 3 months to support you in finalising your project.

Once you have completed the course and downloaded your certificate, you will continue to have access to the course content and resource library for a period of 12 months, during which you can watch and download materials and take part in the discussion forums.